Pricing Models for AI Products

As Artificial Intelligence (AI) gains momentum, a diverse array of companies are seizing the opportunity to develop innovative AI-based products. Recently, I’ve had the privilege of discussing revenue models and monetization strategies with several of these organizations.

While traditional pricing strategies are fundamentally relevant, AI products require careful consideration of specific factors to determine the most effective pricing approach.

This article aims to outline the various pricing models applicable to AI products, providing insight into the suitability of each model under different circumstances.

A definition of AI product for the sake of this analysis

In this context, the term AI Product encompasses any solution that leverages AI as its core element to deliver services or significantly benefits from AI to enhance its value proposition. Whether it’s through the direct application of AI technologies or integrating AI as a foundational component, these products are under the spotlight.


Not all AI products are born equal

As always, the first question to address when working on pricing is: what value are we delivering, and to whom?

AI products are used in very different ways. Some aim to make people more productive, or even to replace people; others help create something that was simply impossible before. Moreover, in terms of consumption, some AI products are designed to be used directly by an end user, while others are platforms (more or less abstracted) on which other products are built, often via an API.

On the other hand, for the sake of our analysis, we are less interested in the technical way in which these products are built or delivered. Whether they rely on Large Language Models or more “traditional” Machine Learning models, we are interested in the job these tools perform (and the value for customers and users) more than in the exact nature of the algorithms used.

With this consideration in mind, we divide AI Products into three categories:

- Companion Products: These are tools designed to augment human capabilities, offering insights, automating tasks, or providing access to knowledge that elevates individual performance. For instance, AI-powered assistants in the healthcare sector can help clinicians diagnose diseases with higher accuracy and speed. Word processors with integrated spelling correctors or generative assistants also fall into this category.

- Replacements: This category aims to automate roles traditionally filled by humans, potentially reshaping job landscapes. On an assembly line where production scrap was historically identified by a human, the use of AI can reduce or even eliminate the need for human workers.

- Enablers: Platforms that empower other products or services by abstracting complex AI functionalities. OpenAI’s suite of APIs, including those powering ChatGPT, exemplifies how enabler products can provide developers with powerful tools to incorporate advanced AI capabilities into their applications without requiring deep expertise in machine learning.

Pricing Models

As the name suggests, companion products are tools that work “hand in hand” with their user. Within this category, we mainly observe two pricing models, listed first below and followed by the models typical of replacement and enabler products:

- Standalone Products: These are tools that perform a “full job” on their own; in other words, “assistants” (such as the famous ChatGPT) that the end user interacts with directly. Because of this direct interaction and the “1 to 1” relationship with the customer, these tools predominantly adopt a per-seat pricing model, mirroring traditional software licensing. Whatever the billing model (the value unit, in this case “per seat”), the hard question is identifying the value (and hence the price) for the user. Of course, the broader the service (think ChatGPT), the harder it is to identify a single price that represents a “fair value” across the customer base and across the different use cases.

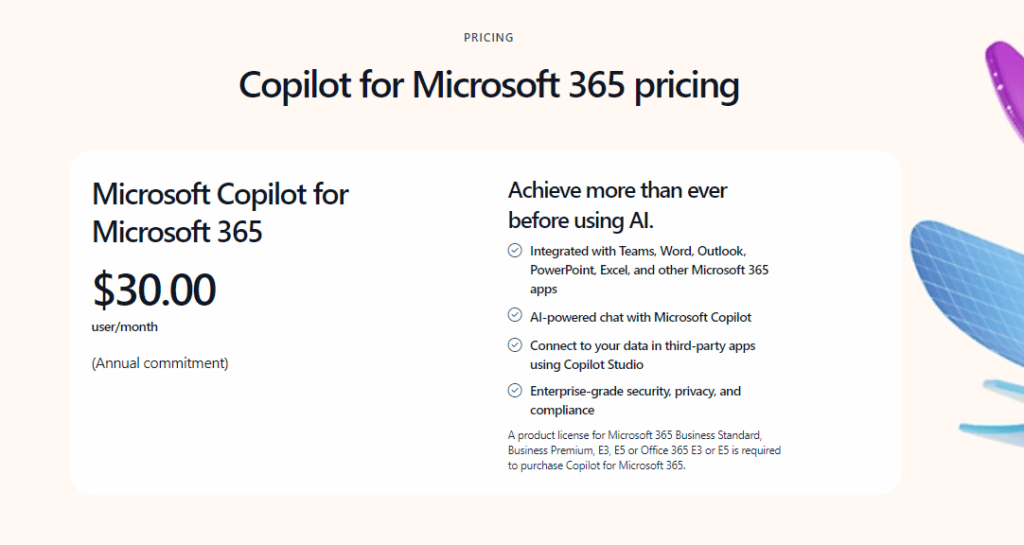

- Bundling with existing products: The situation is slightly different when, still in a “1 to 1” usage pattern, the AI tool is integrated into an existing (or new) broader product. Think about Microsoft Copilot, which makes it easier to write documents in Microsoft Word. The “core product” is still Microsoft Word, whereas Copilot enhances its performance thanks to AI. Therefore, Copilot and similar products can be monetized through bundled pricing, as different product tiers, or as add-ons. Moreover, an AI element built “on top” of mandatory elements (e.g. available only on a Premium plan) can work as a “product fence” and help increase the take rate of the most expensive plans (see the Tiered Pricing Canvas: https://reasonableproduct.com/tiered-pricing-canvas/).

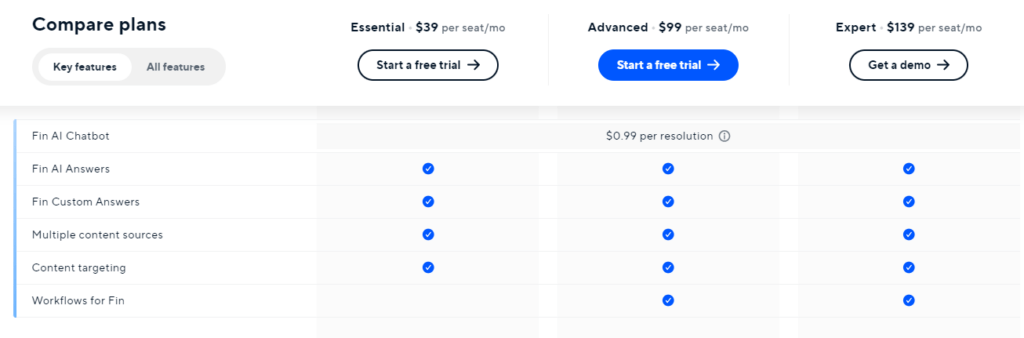

- Replacement Products: The pricing strategy here often centers on the value delivered through automation and efficiency gains. For instance, a company deploying AI for process automation in manufacturing might price its solution based on the cost savings and productivity improvements it delivers compared to human labor. However, the pricing unit deserves special attention: if the overall goal is to enable customers to do more with fewer users, “per seat” pricing will likely be very hard to scale. For instance, Intercom, one of the industry leaders in the Ticketing & User Chats space, charges per seat for existing operators and “per resolution” (aka per closed chat) when there is no operator because AI has taken over.

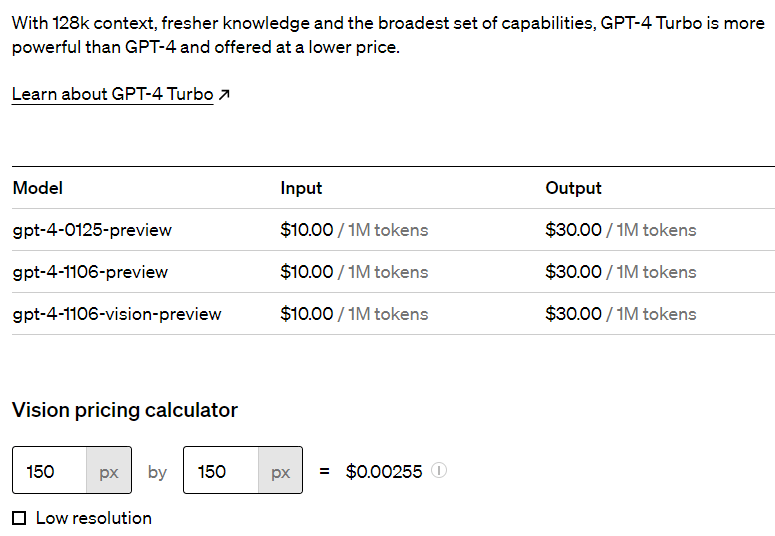

- Enabler Products: Given their foundational role, usage-based pricing models are common, with charges tied to API calls or the volume of data processed. This approach not only makes the service accessible for a wide range of applications but also aligns what customers pay with how much they use it. Note that we said usage and not value: higher usage is a good proxy for the stickiness of a product (i.e. how “deeply” it is integrated into the customer’s processes) but not necessarily for its value. In other words, adopting usage-based pricing for AI enabler services carries a big risk: that the overall pricing model falls back to a “cost plus” model (more about this later).
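To make the replacement-product point about value units more concrete, here is a minimal Python sketch comparing a seat-only plan with a hybrid plan (per seat plus per AI resolution) as automation takes over a growing share of chats. All names and numbers (`HybridPlan`, `scenario`, the prices, the chats handled per operator) are hypothetical and chosen only for illustration; they are not any vendor's actual prices.

```python
from dataclasses import dataclass


@dataclass
class HybridPlan:
    """Hypothetical plan: a fee per human seat plus a fee per AI-resolved chat."""
    price_per_seat: float        # monthly fee per human operator (assumed)
    price_per_resolution: float  # fee per chat closed by the AI (assumed)

    def monthly_revenue(self, seats: int, ai_resolutions: int) -> float:
        return seats * self.price_per_seat + ai_resolutions * self.price_per_resolution


def scenario(total_chats: int, ai_share: float, chats_per_operator: int) -> tuple[int, int]:
    """Return (seats needed, AI resolutions) for a given automation share."""
    ai_resolutions = round(total_chats * ai_share)
    human_chats = total_chats - ai_resolutions
    seats = -(-human_chats // chats_per_operator)  # ceiling division
    return seats, ai_resolutions


# Illustrative numbers only.
seat_only = HybridPlan(price_per_seat=80.0, price_per_resolution=0.0)
hybrid = HybridPlan(price_per_seat=80.0, price_per_resolution=0.25)

for share in (0.0, 0.5, 0.8):
    seats, resolutions = scenario(10_000, share, chats_per_operator=500)
    print(
        f"AI share {share:.0%}: seats={seats}, "
        f"seat-only={seat_only.monthly_revenue(seats, resolutions):.2f}, "
        f"hybrid={hybrid.monthly_revenue(seats, resolutions):.2f}"
    )
```

Under these assumptions, seat-only revenue shrinks as the AI resolves more chats, while the hybrid plan keeps revenue tied to the work actually done for the customer.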

Risks of a blind adoption of “big players” pricing models

Adopting the pricing models of industry giants as a default strategy can be tempting for many organizations. Per-seat billing for AI-powered companion apps and ‘per API call’ invoicing for enabler platforms have almost become a standard, and I’ve seen several companies blindly go in this direction, no questions asked.

However, this default overlooks the vast differences between these giants and everyone else, both in their core assumptions and in the strategy that drives their pricing (more about this later). While the pricing models employed by these giants may serve as valuable case studies, they are not one-size-fits-all solutions.

Another prevalent risk in the industry is the tendency towards a “mix and match” strategy, particularly among startups and smaller businesses. This approach involves cobbling together elements from various pricing models observed in the market, for instance mixing per-seat and per-usage billing. A “mix and match” strategy often lacks coherence, making it challenging for customers to understand what they are paying for and why. Moreover, chances are that these structures fail to reflect how customer segments perceive value, leading to pricing that does not match the value delivered or that leaves money on the table.

Emulating the pricing models of industry giants indeed has its advantages, primarily because it follows a tried and tested path. The selling company and its customers often find comfort and reassurance in familiar pricing structures that successful, large-scale companies have validated. This approach can reduce the friction in the sales process, as customers are already accustomed to and understand the pricing model, which can lead to quicker decision-making and adoption.

Moreover, leveraging known pricing strategies from industry leaders can help new or smaller companies align themselves with market expectations, providing a sense of reliability and stability to potential customers. It also simplifies the explanation of the value proposition, as the pricing model doesn’t become a barrier to understanding. This can be particularly beneficial in sectors where trust and clarity are paramount to securing business relationships.

However, even when companies adopt pricing models similar to those of larger players, they must still consider their own value propositions, cost structures, and target market needs. Tailoring the adopted pricing model to fit these specific aspects of their business will help maximize its effectiveness and ensure long-term sustainability.

As always, this all starts with a clear customer (and use-case) segmentation and a solid pricing strategy; only then comes working out pricing models, value units, and price tags (see the “Product Monetization Canvas” for a framework that helps you address these questions in the right order).

Considerations on Value

One of the most significant risks in adopting a simplistic pricing model is leaving money on the table by not fully appreciating and capitalizing on the value delivered to customers. Value-based pricing, by its very nature, requires a deep understanding of the worth of the services provided to the user, emphasizing perceived value over production costs. This is undoubtedly very hard to do for “general purpose” services, such as many of the AI products we observe.

This approach necessitates a careful segmentation of customers and use cases. It’s crucial to ensure that pricing strategies do not inadvertently lead to cannibalization within your offerings or result in undercharging customers who may have a higher willingness to pay or who derive more significant benefits from your solution. Properly identifying and segmenting these customer groups allows for more tailored pricing strategies that more accurately reflect the value delivered to each segment.

Taking OpenAI API pricing as a case in point: their tiered pricing model appears to be a step in the right direction, with billing primarily based on usage and model complexity. At first glance, this approach suggests a logical correlation: more usage and higher complexity models imply more value. However, this raises a pivotal question: are higher volumes of queries and the employment of more complex models reliable indicators of the value provided to the customer? Does a more complex model, often priced higher ‘per token,’ always equate to more value for a given application?

While it’s undeniable that increased usage and complexity entail higher operational costs for the provider, equating these factors directly with customer value can be misleading. The assumption that more queries or complexity automatically translates to more value for all customers overlooks the nuanced ways in which value is perceived and experienced across different use cases and sectors.

For instance, a few precise queries could deliver immense value to a niche market, potentially more so than a larger volume of generic queries might offer to a broader audience. Similarly, the complexity of a model does not inherently guarantee that the outcomes will be more valuable to every user; the critical factor is whether the complexity addresses a specific need or challenge the customer faces.
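The gap between usage and value can be sketched with a small Python example. It bills two hypothetical customers by tokens and model tier, then compares each bill with the value the customer derives. The tier prices, customer names, and value estimates are all invented for illustration and are not real list prices; in practice, the value figure is the hard part to obtain.

```python
from dataclasses import dataclass

# Hypothetical prices per 1,000 tokens for two model tiers (not real list prices).
PRICE_PER_1K_TOKENS = {"small": 0.5, "large": 4.0}


@dataclass
class Customer:
    name: str
    model: str            # tier used, a key of PRICE_PER_1K_TOKENS
    tokens: int           # tokens consumed in the billing period
    value_created: float  # estimated value for the customer, same currency (assumed known)


def usage_bill(c: Customer) -> float:
    """Usage-based bill: tokens consumed times the per-token price of the tier."""
    return c.tokens / 1000 * PRICE_PER_1K_TOKENS[c.model]


def value_capture(c: Customer) -> float:
    """Share of the customer's value that the usage-based bill captures."""
    return usage_bill(c) / c.value_created


# A few precise queries in a niche vs. a large volume of generic queries.
niche = Customer("niche research tool", "small", 200_000, 50_000.0)
broad = Customer("generic content drafts", "large", 5_000_000, 25_000.0)

for c in (niche, broad):
    print(f"{c.name}: bill={usage_bill(c):.2f}, value captured={value_capture(c):.1%}")
```

With these made-up figures, the low-usage niche customer gets far more value yet pays far less, which is exactly the kind of mismatch a purely usage-driven model can hide.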

Therefore, while cost-driven factors like query volume and model complexity are essential considerations, they should not be the sole determinants of pricing in a value-based model. Instead, a more holistic understanding of how each customer segment perceives and derives value from the AI product is paramount. This might involve developing more sophisticated metrics for value assessment, engaging directly with customers to understand their needs and how they benefit from the AI solution, and continually refining pricing models to align more closely with the value delivered.

Considerations on Costs

The allure of value-based pricing lies in its focus on the customer’s perceived value rather than the seller’s cost structure. This strategy is particularly potent in the digital domain, where marginal costs tend to be negligible, paving the way for substantial profit margins. Nevertheless, AI products are distinct in their reliance on advanced hardware and significant energy consumption, making operational costs a non-trivial factor in pricing decisions.

Despite the inclination towards value-based pricing, ensuring a positive margin remains crucial. This is especially true for offerings not directly tied to usage metrics, where fixed quotas or per-seat licenses predominate. Regularly monitoring consumption patterns is essential, not only to prevent abuse but also to adjust pricing models dynamically in response to evolving usage trends and costs.
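As a rough illustration of this kind of monitoring, the Python sketch below computes the gross margin of each seat on a flat per-seat plan and flags the seats whose consumption makes them unprofitable. The seat price, the cost per query, and the names `SeatUsage`, `seat_margin`, and `break_even_queries` are assumptions made for the example; real cost models are usually more granular.

```python
import math
from dataclasses import dataclass


@dataclass
class SeatUsage:
    seat_id: str
    queries: int  # queries served for this seat in the billing period


def seat_margin(usage: SeatUsage, seat_price: float, cost_per_query: float) -> float:
    """Gross margin for one seat: flat fee minus the variable cost to serve it."""
    return seat_price - usage.queries * cost_per_query


def unprofitable_seats(usages: list[SeatUsage], seat_price: float, cost_per_query: float) -> list[str]:
    """IDs of seats whose variable cost exceeds the flat fee."""
    return [u.seat_id for u in usages if seat_margin(u, seat_price, cost_per_query) < 0]


def break_even_queries(seat_price: float, cost_per_query: float) -> int:
    """Largest number of queries a seat can make before its margin turns negative."""
    return math.floor(seat_price / cost_per_query)


# Illustrative numbers only.
SEAT_PRICE = 20.0
COST_PER_QUERY = 0.0625

usages = [SeatUsage("light", 40), SeatUsage("typical", 200), SeatUsage("heavy", 900)]

print("break-even queries per seat:", break_even_queries(SEAT_PRICE, COST_PER_QUERY))
print("unprofitable seats:", unprofitable_seats(usages, SEAT_PRICE, COST_PER_QUERY))
```

A break-even figure like this can inform a fair-use quota or a higher tier for heavy users, rather than leaving the flat fee to absorb unlimited consumption.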

Pricing strategy first, pricing model later, pricing points last

When helping companies rethink their prices globally, I usually summarize the exercise as: “pricing strategy first, pricing model later, pricing points last”. You can have a look at the Product Monetization Canvas I prepared to get some help addressing these key topics in the right order.

This is particularly pertinent in the rapidly evolving AI market, where everybody seems to be doing “something” with AI, even though their contexts and motivations can greatly vary.

Looking again at the mainstream LLM platforms, the competition is not solely about profitability but about securing a foothold in the market by establishing foundational AI technologies, such as the LLMs offered by OpenAI, Google’s Bard, and others, as industry standards. The primary focus is on adoption and growth, leveraging pricing as a tool to facilitate widespread use and integration of these technologies into businesses.

The simplicity of the current pricing models adopted by these leaders serves a dual purpose: it lowers the barrier for businesses to adopt these cutting-edge technologies, while simultaneously ensuring a steady revenue stream for their creators. This approach underscores the strategic intent to prioritize market penetration and user base expansion over immediate financial gains.

At the same time, while cost-plus is usually not an optimal model (unless you’re forced into it), it’s important to recognize that the size of the markup makes the difference. In the case of OpenAI, it allowed them to reach a reported $2 billion in annualized revenue by the end of 2023.

My name is Salva, I am a product exec and Senior Partner at Reasonable Product, a boutique Product Advisory Firm.

I write about product pricing, e-commerce/marketplaces, subscription models, and modern product organizations. I mainly engage and work in tech products, including SaaS, Marketplaces, and IoT (Hardware + Software).

My superpower is to move between ambiguity (as in creativity, innovation, opportunity, and ‘thinking out of the box’) and structure (as in ‘getting things done’ and getting real impact).

I am firmly convinced that you can help others only if you have lived the same challenges: I have been lucky enough to practice product leadership in companies of different sizes and with different product maturity. Doing product right is hard: I felt the pain myself and developed my methods to get to efficient product teams that produce meaningful work.